Hadoop is an open-source framework that allows to store and process big

data in a distributed environment across clusters of computers using

simple programming models. It is designed to scale up from single

servers to thousands of machines, each offering local computation and

storage.

Hadoop

is an Apache open source framework written in java that allows

distributed processing of large datasets across clusters of computers

using simple programming models. A Hadoop frame-worked application

works in an environment that provides distributed storage and

computation across clusters of computers. Hadoop is designed to scale

up from single server to thousands of machines, each offering local

computation and storage.

Hadoop

Architecture

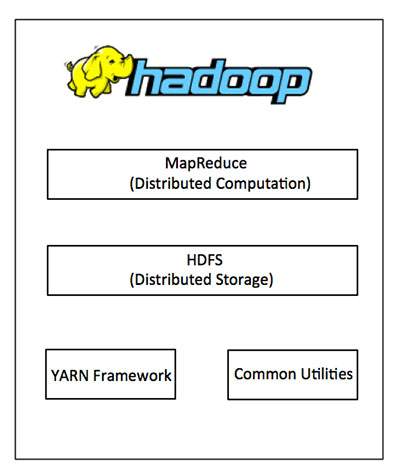

Hadoop

framework includes following four modules:

Hadoop

Common: These are Java libraries and utilities required by other

Hadoop modules. These libraries provides filesystem and OS level

abstractions and contains the necessary Java files and scripts

required to start Hadoop.

Hadoop

YARN: This is a framework for job scheduling and cluster resource

management.

Hadoop

Distributed File System (HDFS™): A distributed file system that

provides high-throughput access to application data.

Hadoop

MapReduce: This is YARN-based system for parallel processing of

large data sets.

We

can use following diagram to depict these four components available

in Hadoop framework.

Since

2012, the term "Hadoop" often refers not just to the base

modules mentioned above but also to the collection of additional

software packages that can be installed on top of or alongside

Hadoop, such as Apache Pig, Apache Hive, Apache HBase, Apache Spark

etc.

MapReduce

Hadoop

MapReduce is a software framework for easily writing applications

which process big amounts of data in-parallel on large clusters

(thousands of nodes) of commodity hardware in a reliable,

fault-tolerant manner.

The

term MapReduce actually refers to the following two different tasks

that Hadoop programs perform:

The

Map Task: This is the first task, which takes input data and

converts it into a set of data, where individual elements are broken

down into tuples (key/value pairs).

The

Reduce Task: This task takes the output from a map task as input and

combines those data tuples into a smaller set of tuples. The reduce

task is always performed after the map task.

Typically

both the input and the output are stored in a file-system. The

framework takes care of scheduling tasks, monitoring them and

re-executes the failed tasks.

The

MapReduce framework consists of a single master JobTracker and one

slave TaskTracker per cluster-node. The master is responsible for

resource management, tracking resource consumption/availability and

scheduling the jobs component tasks on the slaves, monitoring them

and re-executing the failed tasks. The slaves TaskTracker execute the

tasks as directed by the master and provide task-status information

to the master periodically.

The

JobTracker is a single point of failure for the Hadoop MapReduce

service which means if JobTracker goes down, all running jobs are

halted.

Hadoop

Distributed File System

Hadoop

can work directly with any mountable distributed file system such as

Local FS, HFTP FS, S3 FS, and others, but the most common file system

used by Hadoop is the Hadoop Distributed File System (HDFS).

The

Hadoop Distributed File System (HDFS) is based on the Google File

System (GFS) and provides a distributed file system that is designed

to run on large clusters (thousands of computers) of small computer

machines in a reliable, fault-tolerant manner.

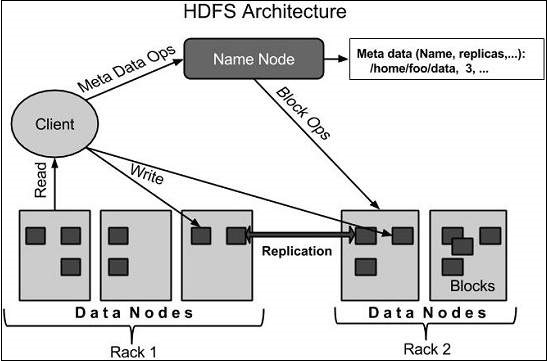

HDFS

uses a master/slave architecture where master consists of a single

NameNode that manages the file system metadata and one or more slave

DataNodes that store the actual data.

A

file in an HDFS namespace is split into several blocks and those

blocks are stored in a set of DataNodes. The NameNode determines the

mapping of blocks to the DataNodes. The DataNodes takes care of read

and write operation with the file system. They also take care of

block creation, deletion and replication based on instruction given

by NameNode.

HDFS

provides a shell like any other file system and a list of commands

are available to interact with the file system. These shell commands

will be covered in a separate chapter along with appropriate

examples.

How

Does Hadoop Work?

Stage

1

A

user/application can submit a job to the Hadoop (a hadoop job client)

for required process by specifying the following items:

The

location of the input and output files in the distributed file

system.

The

java classes in the form of jar file containing the implementation

of map and reduce functions.

The

job configuration by setting different parameters specific to the

job.

Stage

2

The

Hadoop job client then submits the job (jar/executable etc) and

configuration to the JobTracker which then assumes the responsibility

of distributing the software/configuration to the slaves, scheduling

tasks and monitoring them, providing status and diagnostic

information to the job-client.

Stage

3

The

TaskTrackers on different nodes execute the task as per MapReduce

implementation and output of the reduce function is stored into the

output files on the file system.

Advantages

of Hadoop

Hadoop

framework allows the user to quickly write and test distributed

systems. It is efficient, and it automatic distributes the data and

work across the machines and in turn, utilizes the underlying

parallelism of the CPU cores.

Hadoop

does not rely on hardware to provide fault-tolerance and high

availability (FTHA), rather Hadoop library itself has been designed

to detect and handle failures at the application layer.

Servers

can be added or removed from the cluster dynamically and Hadoop

continues to operate without interruption.

Another

big advantage of Hadoop is that apart from being open source, it is

compatible on all the platforms since it is Java based.